You Only Look Once (YOLO) [🚧 WIP]#

Ultralytics supports a wide range of YOLO models, from early versions like YOLOv3 to YOLO11, the latest version of the acclaimed real-time object detection and image segmentation model. YOLO11 models pretrained on the COCO dataset are available for Detection, Segmentation, and Pose Estimation. Additionally, Classification models pretrained on the ImageNet dataset are available. Tracking mode is compatible with all Detection, Segmentation, and Pose models. All models are automatically downloaded from the latest Ultralytics release upon first use.
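As a rough illustration of how the task families above map to pretrained weight files, here is a minimal sketch. The helper names `weights_name` and `load_model` are hypothetical (they are not part of Ultralytics); the sketch assumes the usual Ultralytics file naming, where a task suffix is appended to the base model name.

```python
# Suffix appended to the base weight name for each task (detection has none).
# Assumption: this follows the usual Ultralytics naming, e.g. "yolo11n-seg.pt".
TASK_SUFFIX = {"detect": "", "segment": "-seg", "pose": "-pose", "classify": "-cls"}


def weights_name(task: str, size: str = "n", family: str = "yolo11") -> str:
    """Build a weight filename such as 'yolo11n-seg.pt' (hypothetical helper)."""
    if task not in TASK_SUFFIX:
        raise ValueError(f"unknown task: {task!r}")
    return f"{family}{size}{TASK_SUFFIX[task]}.pt"


def load_model(task: str, size: str = "n"):
    """Load the model; the weights are downloaded on first use."""
    from ultralytics import YOLO  # imported lazily so weights_name() works without it

    return YOLO(weights_name(task, size))


print(weights_name("detect"))    # yolo11n.pt
print(weights_name("pose", "s")) # yolo11s-pose.pt
```

Passing `family="yolov8"` gives the YOLOv8 names used later in this notebook, for example `yolov8n.pt`.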

import cv2

import numpy as np

import matplotlib.pyplot as plt

from IPython.display import display, Image

import torch

import random

# Set random seeds for reproducibility

random.seed(42)

np.random.seed(42)

torch.manual_seed(42)

<torch._C.Generator at 0x138350bf0>

# Initialize detection model

from ultralytics import YOLO

yolo_model = YOLO("yolov8n.pt")

!yolo task=detect mode=predict model=yolov8n.pt conf=0.25 source='https://ultralytics.com/images/bus.jpg' save=True

Ultralytics 8.3.4 🚀 Python-3.12.4 torch-2.4.1 CPU (Apple M3 Max)

YOLOv8n summary (fused): 168 layers, 3,151,904 parameters, 0 gradients, 8.7 GFLOPs

Downloading https://ultralytics.com/images/bus.jpg to 'bus.jpg'...

100%|█████████████████████████████████████████| 134k/134k [00:00<00:00, 215MB/s]

image 1/1 /Users/n0man/Code/n03an.me/notebooks/va/bus.jpg: 640x480 4 persons, 1 bus, 1 stop sign, 29.8ms

Speed: 1.6ms preprocess, 29.8ms inference, 1.0ms postprocess per image at shape (1, 3, 640, 480)

Results saved to /Users/n0man/Code/n03an.me/runs/detect/predict7

💡 Learn more at https://docs.ultralytics.com/modes/predict

Set up the device (mps for macOS, cuda for NVIDIA GPUs, or cpu for CPU-only inference):

device = "mps" if torch.backends.mps.is_available() else "cuda" if torch.cuda.is_available() else "cpu"
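The same preference order can be written as a small function, which makes the fallback logic easier to read and to test. This is only a sketch; `select_device` and `current_device` are hypothetical helper names, not part of PyTorch or Ultralytics.

```python
def select_device(mps_available: bool, cuda_available: bool) -> str:
    """Mirror the one-liner above: prefer MPS, then CUDA, then fall back to CPU."""
    if mps_available:
        return "mps"
    if cuda_available:
        return "cuda"
    return "cpu"


def current_device() -> str:
    """Ask PyTorch which backends are available on this machine."""
    import torch  # imported lazily so select_device() can be used without torch

    return select_device(torch.backends.mps.is_available(), torch.cuda.is_available())


print(select_device(False, True))  # cuda
```

Note that defining `device` does not by itself move the YOLO model: the prediction log above reports CPU. Ultralytics accepts a `device` argument at prediction time, so a call such as `yolo_model(image_path, device=device)` should run inference on the selected backend.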

# Define a helper that runs detection and plots the annotated image

def detect_objects(image_path):

    results = yolo_model(image_path)

    # Visualize results

    plt.figure(figsize=(12, 8))

    plt.imshow(results[0].plot()[:, :, ::-1])  # Convert BGR to RGB

    plt.title("Object Detection")

    plt.axis('off')

    plt.show()

# Run detection

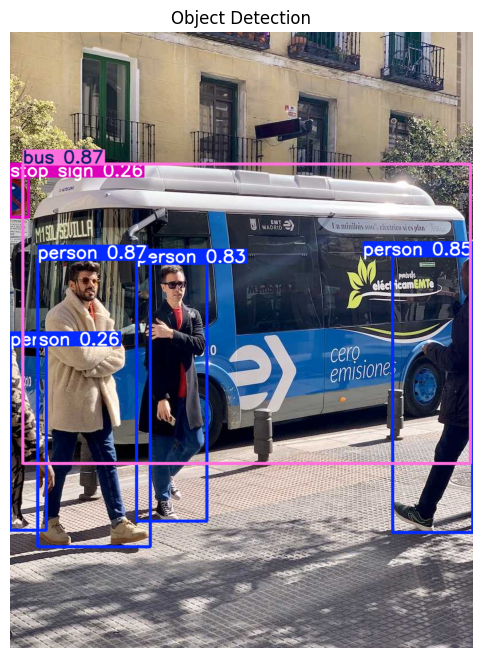

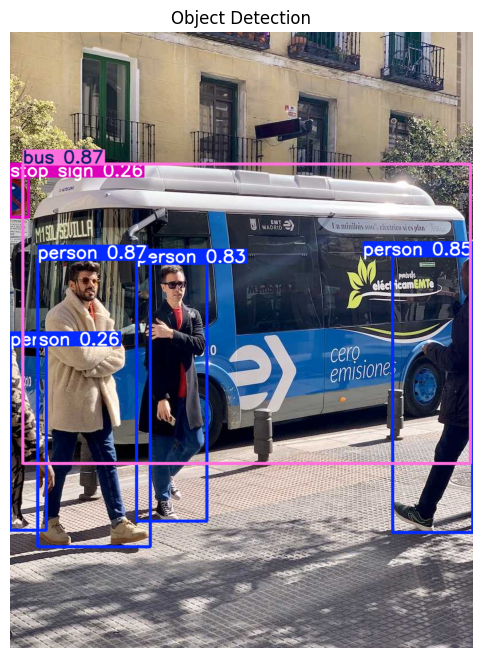

detect_objects("images/bus.jpg")

image 1/1 /Users/n0man/Code/n03an.me/notebooks/va/images/bus.jpg: 640x480 4 persons, 1 bus, 1 stop sign, 27.2ms

Speed: 1.5ms preprocess, 27.2ms inference, 0.9ms postprocess per image at shape (1, 3, 640, 480)

Detecting Events#

Let's try something crude: the function detect_event takes the results from the YOLO model and checks for the presence of specific classes (like “person”) to flag interesting events. If there are 3 or more people (and no fire), it flags “fighting”, and if there are 5 or more, it flags a “crowd” situation 🤦🏻‍♂️. It is as crude as a heuristic could get, but you get the point… Note that the COCO-pretrained yolov8n model has no “fire” or “smoke” class, so the fire branch can only trigger with a custom-trained model.

FIRE_CLASSES = ["fire", "smoke"]

CROWD_THRESHOLD = 5

def detect_event(yolo_results):

    people_count = 0

    fire_detected = False

    for det in yolo_results.boxes:

        cls = int(det.cls[0])

        label = yolo_model.names[cls]

        if label == "person":

            people_count += 1

        elif label in FIRE_CLASSES:

            fire_detected = True

    fighting_detected = people_count >= 3 and not fire_detected

    overcrowded = people_count >= CROWD_THRESHOLD

    return fire_detected, fighting_detected, overcrowded, people_count
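To see the thresholds in isolation, the same rule can be run on a plain list of class labels with no model involved. This is a minimal sketch; `classify_labels` and `FIGHT_THRESHOLD` are hypothetical names introduced here for illustration (the original hard-codes the value 3).

```python
FIRE_CLASSES = {"fire", "smoke"}
FIGHT_THRESHOLD = 3  # hypothetical name for the hard-coded 3 in detect_event
CROWD_THRESHOLD = 5


def classify_labels(labels):
    """Apply the detect_event rule to a list of class-name strings."""
    people_count = sum(1 for label in labels if label == "person")
    fire_detected = any(label in FIRE_CLASSES for label in labels)
    fighting_detected = people_count >= FIGHT_THRESHOLD and not fire_detected
    overcrowded = people_count >= CROWD_THRESHOLD
    return fire_detected, fighting_detected, overcrowded, people_count


# Labels matching the bus.jpg detections: 4 persons, 1 bus, 1 stop sign
bus_labels = ["person"] * 4 + ["bus", "stop sign"]
print(classify_labels(bus_labels))  # (False, True, False, 4)
```

With four people the rule flags "fighting" but not "crowd", which shows how easily a plain head count produces false alarms.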

We will use the lightweight BLIP model Salesforce/blip-image-captioning-base (possibly small enough to run on Jetson edge devices like the Jetson Xavier NX?) to generate image captions.

from transformers import BlipProcessor, BlipForConditionalGeneration

from PIL import Image as PILImage

blip_processor = BlipProcessor.from_pretrained("Salesforce/blip-image-captioning-base")

blip_model = BlipForConditionalGeneration.from_pretrained(

    "Salesforce/blip-image-captioning-base",

    device_map="auto",

    trust_remote_code=True,

    use_safetensors=True  # <--- this avoids torch.load

)

def generate_caption(frame):

    # OpenCV frames are BGR; BLIP expects an RGB PIL image

    pil_image = PILImage.fromarray(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))

    inputs = blip_processor(images=pil_image, return_tensors="pt")

    # Send inputs to wherever device_map="auto" placed the model, which may differ from `device`

    inputs = {k: v.to(blip_model.device) for k, v in inputs.items()}

    output = blip_model.generate(**inputs, max_new_tokens=50)

    caption = blip_processor.batch_decode(output, skip_special_tokens=True)[0]

    return caption

/Users/n0man/Code/n03an.me/.venv/lib/python3.12/site-packages/tqdm/auto.py:21: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html

from .autonotebook import tqdm as notebook_tqdm

WARNING:torchao.kernel.intmm:Warning: Detected no triton, on systems without Triton certain kernels will not work

Using a slow image processor as `use_fast` is unset and a slow processor was saved with this model. `use_fast=True` will be the default behavior in v4.52, even if the model was saved with a slow processor. This will result in minor differences in outputs. You'll still be able to use a slow processor with `use_fast=False`.

Detecting Events and Captions…#

image_path = "images/bus.jpg"

frame = cv2.imread(image_path)

detect_objects(image_path)

results = yolo_model(frame)[0]

fire, fight, crowd, count = detect_event(results)

if fire or fight or crowd:

    print("\n[EVENT DETECTED]")

    if fire: print("🔥 Fire detected")

    if fight: print("🔴 Possible fighting detected")

    if crowd: print(f"👯👯👯👯 Overcrowding detected ({count} people)")

    caption = generate_caption(frame)

    print(f"Captions : {caption}")

else:

    print("✅ No major event detected in the image.")

image 1/1 /Users/n0man/Code/n03an.me/notebooks/va/images/bus.jpg: 640x480 4 persons, 1 bus, 1 stop sign, 34.6ms

Speed: 2.4ms preprocess, 34.6ms inference, 0.4ms postprocess per image at shape (1, 3, 640, 480)

0: 640x480 4 persons, 1 bus, 1 stop sign, 21.7ms

Speed: 1.1ms preprocess, 21.7ms inference, 0.4ms postprocess per image at shape (1, 3, 640, 480)

[EVENT DETECTED]

🔴 Possible fighting detected

Captions : a group of people walking around a bus

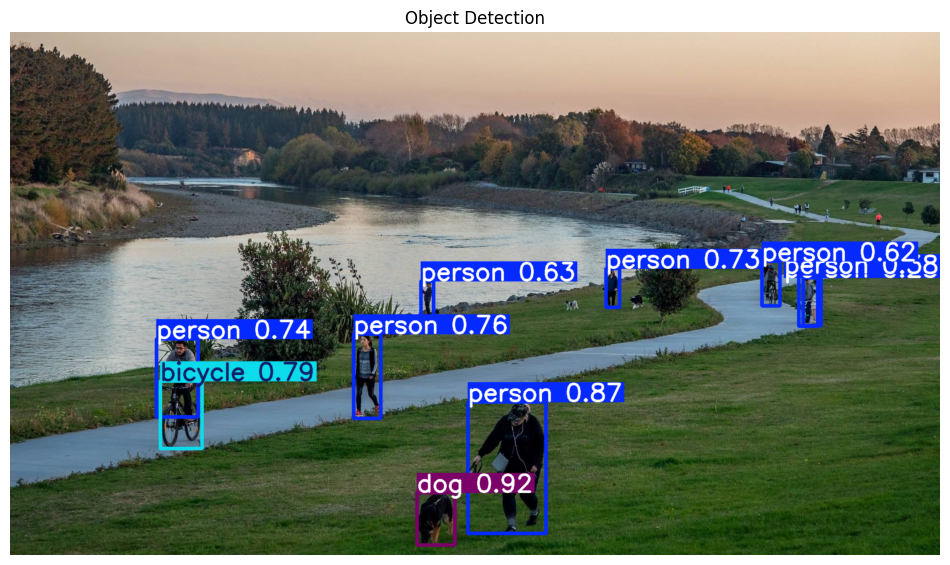

image_path = "images/park.jpg"

frame = cv2.imread(image_path)

detect_objects(image_path)

results = yolo_model(frame)[0]

fire, fight, crowd, count = detect_event(results)

if fire or fight or crowd:

    print("\n[EVENT DETECTED]")

    if fire: print("🔥 Fire detected")

    if fight: print("🔴 Possible fighting detected")

    if crowd: print(f"👯👯👯👯 Overcrowding detected ({count} people)")

    caption = generate_caption(frame)

    print(f"Captions : {caption}")

else:

    print("✅ No major event detected in the image.")

image 1/1 /Users/n0man/Code/n03an.me/notebooks/va/images/park.jpg: 384x640 8 persons, 1 bicycle, 1 dog, 26.7ms

Speed: 1.5ms preprocess, 26.7ms inference, 0.6ms postprocess per image at shape (1, 3, 384, 640)

0: 384x640 8 persons, 1 bicycle, 1 dog, 17.2ms

Speed: 1.3ms preprocess, 17.2ms inference, 0.4ms postprocess per image at shape (1, 3, 384, 640)

[EVENT DETECTED]

🔴 Possible fighting detected

👯👯👯👯 Overcrowding detected (8 people)

Captions : a group of people walking along a river
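The two cells above repeat the same reporting logic for each image. One possible refactor is sketched below: a helper that turns the event flags into report lines and only asks for a caption when something was flagged, as the cells do. The name `report_events` and the `caption_fn` callback are assumptions made for this sketch, not part of the original notebook.

```python
from typing import Callable, List


def report_events(fire: bool, fight: bool, crowd: bool, count: int,
                  caption_fn: Callable[[], str]) -> List[str]:
    """Build the report lines; caption_fn is called only when an event is flagged."""
    if not (fire or fight or crowd):
        return ["✅ No major event detected in the image."]
    lines = ["[EVENT DETECTED]"]
    if fire:
        lines.append("🔥 Fire detected")
    if fight:
        lines.append("🔴 Possible fighting detected")
    if crowd:
        lines.append(f"👯👯👯👯 Overcrowding detected ({count} people)")
    lines.append(f"Captions : {caption_fn()}")
    return lines


# Stand-in caption function so the sketch runs without loading BLIP
for line in report_events(False, True, True, 8, lambda: "a placeholder caption"):
    print(line)
```

In the notebook this could be called as `report_events(*detect_event(results), caption_fn=lambda: generate_caption(frame))`, which keeps the relatively expensive BLIP call out of the "no event" path.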

Extracting Attributes and Meta Information Using RT-DETR#

from ultralytics import RTDETR

# Load a COCO-pretrained RT-DETR-l model

model = RTDETR("rtdetr-l.pt")

Downloading https://github.com/ultralytics/assets/releases/download/v8.3.0/rtdetr-l.pt to 'rtdetr-l.pt'...

100%|██████████| 63.4M/63.4M [00:02<00:00, 29.4MB/s]

# Display model information (optional)

model.info()

# Train the model on the COCO8 example dataset for 100 epochs

results = model.train(data="coco8.yaml", epochs=100, imgsz=640)

# Run inference with the RT-DETR-l model on the 'nemo.png' image

results = model("images/nemo.png")
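The notebook does not show which attributes are extracted from the detections, so the following is only a sketch of one way to turn them into plain records (label, confidence, box geometry). `boxes_to_records` is a hypothetical helper; it takes plain Python lists so it can be tried without a model.

```python
def boxes_to_records(xyxy, confs, class_ids, names):
    """Convert parallel lists of boxes, scores and class ids into dict records."""
    records = []
    for (x1, y1, x2, y2), conf, cls_id in zip(xyxy, confs, class_ids):
        records.append({
            "label": names[int(cls_id)],          # class ids may arrive as floats
            "confidence": round(float(conf), 3),
            "bbox_xyxy": [float(x1), float(y1), float(x2), float(y2)],
            "width": float(x2 - x1),
            "height": float(y2 - y1),
        })
    return records


# Made-up values for illustration only, not real model output
demo = boxes_to_records([[10, 20, 110, 220]], [0.876], [0.0], {0: "person"})
print(demo[0]["label"], demo[0]["width"], demo[0]["height"])  # person 100.0 200.0
```

With an Ultralytics result `r = results[0]`, the inputs should be available as `r.boxes.xyxy.tolist()`, `r.boxes.conf.tolist()`, `r.boxes.cls.tolist()` and `r.names`.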

rt-detr-l summary: 494 layers, 32,148,140 parameters, 0 gradients, 103.8 GFLOPs

New https://pypi.org/project/ultralytics/8.3.176 available 😃 Update with 'pip install -U ultralytics'

Ultralytics 8.3.4 🚀 Python-3.12.4 torch-2.4.1 CPU (Apple M3 Max)

engine/trainer: task=detect, mode=train, model=rtdetr-l.pt, data=coco8.yaml, epochs=100, time=None, patience=100, batch=16, imgsz=640, save=True, save_period=-1, cache=False, device=None, workers=8, project=None, name=train, exist_ok=False, pretrained=True, optimizer=auto, verbose=True, seed=0, deterministic=True, single_cls=False, rect=False, cos_lr=False, close_mosaic=10, resume=False, amp=True, fraction=1.0, profile=False, freeze=None, multi_scale=False, overlap_mask=True, mask_ratio=4, dropout=0.0, val=True, split=val, save_json=False, save_hybrid=False, conf=None, iou=0.7, max_det=300, half=False, dnn=False, plots=True, source=None, vid_stride=1, stream_buffer=False, visualize=False, augment=False, agnostic_nms=False, classes=None, retina_masks=False, embed=None, show=False, save_frames=False, save_txt=False, save_conf=False, save_crop=False, show_labels=True, show_conf=True, show_boxes=True, line_width=None, format=torchscript, keras=False, optimize=False, int8=False, dynamic=False, simplify=True, opset=None, workspace=4, nms=False, lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=7.5, cls=0.5, dfl=1.5, pose=12.0, kobj=1.0, label_smoothing=0.0, nbs=64, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, bgr=0.0, mosaic=1.0, mixup=0.0, copy_paste=0.0, copy_paste_mode=flip, auto_augment=randaugment, erasing=0.4, crop_fraction=1.0, cfg=None, tracker=botsort.yaml, save_dir=/Users/n0man/Code/n03an.me/runs/detect/train

Dataset 'coco8.yaml' images not found ⚠️, missing path '/Users/n0man/Code/datasets/coco8/images/val'

Downloading https://ultralytics.com/assets/coco8.zip to '/Users/n0man/Code/datasets/coco8.zip'...

100%|██████████| 433k/433k [00:00<00:00, 11.8MB/s]

Unzipping /Users/n0man/Code/datasets/coco8.zip to /Users/n0man/Code/datasets/coco8...: 100%|██████████| 25/25 [00:00<00:00, 4312.64file/s]

Dataset download success ✅ (0.9s), saved to /Users/n0man/Code/datasets

WARNING ⚠️ no model scale passed. Assuming scale='l'.

from n params module arguments

0 -1 1 25248 ultralytics.nn.modules.block.HGStem [3, 32, 48]

1 -1 6 155072 ultralytics.nn.modules.block.HGBlock [48, 48, 128, 3, 6]

2 -1 1 1408 ultralytics.nn.modules.conv.DWConv [128, 128, 3, 2, 1, False]

3 -1 6 839296 ultralytics.nn.modules.block.HGBlock [128, 96, 512, 3, 6]

4 -1 1 5632 ultralytics.nn.modules.conv.DWConv [512, 512, 3, 2, 1, False]

5 -1 6 1695360 ultralytics.nn.modules.block.HGBlock [512, 192, 1024, 5, 6, True, False]

6 -1 6 2055808 ultralytics.nn.modules.block.HGBlock [1024, 192, 1024, 5, 6, True, True]

7 -1 6 2055808 ultralytics.nn.modules.block.HGBlock [1024, 192, 1024, 5, 6, True, True]

8 -1 1 11264 ultralytics.nn.modules.conv.DWConv [1024, 1024, 3, 2, 1, False]

9 -1 6 6708480 ultralytics.nn.modules.block.HGBlock [1024, 384, 2048, 5, 6, True, False]

10 -1 1 524800 ultralytics.nn.modules.conv.Conv [2048, 256, 1, 1, None, 1, 1, False]

11 -1 1 789760 ultralytics.nn.modules.transformer.AIFI [256, 1024, 8]

12 -1 1 66048 ultralytics.nn.modules.conv.Conv [256, 256, 1, 1]

13 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

14 7 1 262656 ultralytics.nn.modules.conv.Conv [1024, 256, 1, 1, None, 1, 1, False]

15 [-2, -1] 1 0 ultralytics.nn.modules.conv.Concat [1]

16 -1 3 2232320 ultralytics.nn.modules.block.RepC3 [512, 256, 3]

17 -1 1 66048 ultralytics.nn.modules.conv.Conv [256, 256, 1, 1]

18 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

19 3 1 131584 ultralytics.nn.modules.conv.Conv [512, 256, 1, 1, None, 1, 1, False]

20 [-2, -1] 1 0 ultralytics.nn.modules.conv.Concat [1]

21 -1 3 2232320 ultralytics.nn.modules.block.RepC3 [512, 256, 3]

22 -1 1 590336 ultralytics.nn.modules.conv.Conv [256, 256, 3, 2]

23 [-1, 17] 1 0 ultralytics.nn.modules.conv.Concat [1]

24 -1 3 2232320 ultralytics.nn.modules.block.RepC3 [512, 256, 3]

25 -1 1 590336 ultralytics.nn.modules.conv.Conv [256, 256, 3, 2]

26 [-1, 12] 1 0 ultralytics.nn.modules.conv.Concat [1]

27 -1 3 2232320 ultralytics.nn.modules.block.RepC3 [512, 256, 3]

28 [21, 24, 27] 1 7466252 ultralytics.nn.modules.head.RTDETRDecoder [80, [256, 256, 256]]

rt-detr-l summary: 681 layers, 32,970,476 parameters, 32,970,476 gradients, 108.3 GFLOPs

Transferred 322/941 items from pretrained weights

TensorBoard: Start with 'tensorboard --logdir /Users/n0man/Code/n03an.me/runs/detect/train', view at http://localhost:6006/

train: Scanning /Users/n0man/Code/datasets/coco8/labels/train... 4 images, 0 backgrounds, 0 corrupt: 100%|██████████| 4/4 [00:00<00:00, 2172.65it/s]

train: New cache created: /Users/n0man/Code/datasets/coco8/labels/train.cache

val: Scanning /Users/n0man/Code/datasets/coco8/labels/val... 4 images, 0 backgrounds, 0 corrupt: 100%|██████████| 4/4 [00:00<00:00, 3964.37it/s]

val: New cache created: /Users/n0man/Code/datasets/coco8/labels/val.cache

Plotting labels to /Users/n0man/Code/n03an.me/runs/detect/train/labels.jpg...

optimizer: 'optimizer=auto' found, ignoring 'lr0=0.01' and 'momentum=0.937' and determining best 'optimizer', 'lr0' and 'momentum' automatically...

optimizer: AdamW(lr=0.000119, momentum=0.9) with parameter groups 143 weight(decay=0.0), 206 weight(decay=0.0005), 226 bias(decay=0.0)

TensorBoard: model graph visualization added ✅

Image sizes 640 train, 640 val

Using 0 dataloader workers

Logging results to /Users/n0man/Code/n03an.me/runs/detect/train

Starting training for 100 epochs...

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

1/100 0G 1.284 1.097 1.412 21 640: 100%|██████████| 1/1 [00:04<00:00, 4.54s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.20s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

2/100 0G 1.377 1.018 1.074 23 640: 100%|██████████| 1/1 [00:04<00:00, 4.27s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.16s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

3/100 0G 1.068 1.469 1.542 15 640: 100%|██████████| 1/1 [00:04<00:00, 4.28s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.15s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

4/100 0G 1.183 1.485 0.9249 30 640: 100%|██████████| 1/1 [00:04<00:00, 4.12s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.16s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

5/100 0G 1.343 1.214 1.444 20 640: 100%|██████████| 1/1 [00:04<00:00, 4.01s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.20s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

6/100 0G 1.153 1.343 1.387 27 640: 100%|██████████| 1/1 [00:04<00:00, 4.29s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.30s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

7/100 0G 1.182 1.369 0.9188 23 640: 100%|██████████| 1/1 [00:04<00:00, 4.75s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.28s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

8/100 0G 1.073 1.679 1.09 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.49s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.24s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

9/100 0G 1.264 1.106 0.9364 34 640: 100%|██████████| 1/1 [00:04<00:00, 4.29s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.25s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

10/100 0G 1.213 1.173 1.464 22 640: 100%|██████████| 1/1 [00:04<00:00, 4.27s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.27s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

11/100 0G 1.193 1.152 1.014 28 640: 100%|██████████| 1/1 [00:04<00:00, 4.11s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.20s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

12/100 0G 1.309 1.268 1.08 18 640: 100%|██████████| 1/1 [00:04<00:00, 4.29s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

13/100 0G 1.185 1.02 0.898 39 640: 100%|██████████| 1/1 [00:04<00:00, 4.31s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.16s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

14/100 0G 0.8898 2.022 1.57 10 640: 100%|██████████| 1/1 [00:04<00:00, 4.27s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

15/100 0G 1.121 1.256 0.7394 32 640: 100%|██████████| 1/1 [00:04<00:00, 4.02s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.18s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

16/100 0G 1.156 1.312 1.134 25 640: 100%|██████████| 1/1 [00:04<00:00, 4.31s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

17/100 0G 1.286 0.9381 1.079 26 640: 100%|██████████| 1/1 [00:04<00:00, 4.02s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.23s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

18/100 0G 1.222 0.9205 0.9124 31 640: 100%|██████████| 1/1 [00:04<00:00, 4.07s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.20s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

19/100 0G 1.302 0.926 0.8966 40 640: 100%|██████████| 1/1 [00:04<00:00, 4.12s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

20/100 0G 1.18 0.9929 1.1 16 640: 100%|██████████| 1/1 [00:04<00:00, 4.59s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.14s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

21/100 0G 1.23 1.23 1.398 15 640: 100%|██████████| 1/1 [00:04<00:00, 4.51s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.26s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

22/100 0G 0.9727 1.34 1.159 23 640: 100%|██████████| 1/1 [00:04<00:00, 4.46s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.18s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

23/100 0G 1.068 1.191 1.192 23 640: 100%|██████████| 1/1 [00:04<00:00, 4.04s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.14s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

24/100 0G 1.191 1.102 1.766 15 640: 100%|██████████| 1/1 [00:04<00:00, 4.52s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.18s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

25/100 0G 1.196 1.091 1.231 25 640: 100%|██████████| 1/1 [00:04<00:00, 4.12s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.17s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

26/100 0G 1.224 0.7853 1.279 27 640: 100%|██████████| 1/1 [00:03<00:00, 3.96s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

27/100 0G 1.149 1.153 1.294 21 640: 100%|██████████| 1/1 [00:03<00:00, 4.00s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.18s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

28/100 0G 1.225 0.8267 1.087 33 640: 100%|██████████| 1/1 [00:03<00:00, 3.93s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.20s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

29/100 0G 0.951 1.319 0.8126 31 640: 100%|██████████| 1/1 [00:04<00:00, 4.12s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.16s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

30/100 0G 1.16 1.034 1.211 25 640: 100%|██████████| 1/1 [00:03<00:00, 3.96s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.23s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

31/100 0G 1.103 1.012 0.7676 42 640: 100%|██████████| 1/1 [00:04<00:00, 4.43s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.28s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

32/100 0G 1.227 0.9841 1.14 20 640: 100%|██████████| 1/1 [00:04<00:00, 4.39s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.29s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

33/100 0G 0.7112 2.249 1.21 9 640: 100%|██████████| 1/1 [00:04<00:00, 4.48s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.24s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

34/100 0G 1.075 1.015 0.9163 30 640: 100%|██████████| 1/1 [00:04<00:00, 4.17s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

35/100 0G 1.088 1.078 1.118 30 640: 100%|██████████| 1/1 [00:04<00:00, 4.30s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.24s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

36/100 0G 1.044 1.221 0.7632 26 640: 100%|██████████| 1/1 [00:04<00:00, 4.53s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.18s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

37/100 0G 0.9202 1.333 0.8312 20 640: 100%|██████████| 1/1 [00:04<00:00, 4.27s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.27s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

38/100 0G 0.9601 1.411 0.7987 16 640: 100%|██████████| 1/1 [00:04<00:00, 4.66s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.27s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

39/100 0G 1.083 0.8606 1.248 18 640: 100%|██████████| 1/1 [00:04<00:00, 4.66s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.23s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

40/100 0G 1.077 0.9241 0.8151 25 640: 100%|██████████| 1/1 [00:04<00:00, 4.58s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0 0 0 0

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

41/100 0G 0.9988 1.316 0.9092 23 640: 100%|██████████| 1/1 [00:04<00:00, 4.80s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0.00538 0.0167 0.0183 0.00415

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

42/100 0G 1.222 1.02 1.441 11 640: 100%|██████████| 1/1 [00:04<00:00, 4.28s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.33s/it]

all 4 17 0.00538 0.0167 0.0183 0.00415

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

43/100 0G 1.072 1.31 1.099 26 640: 100%|██████████| 1/1 [00:04<00:00, 4.30s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.18s/it]

all 4 17 0.00314 0.0167 0.0173 0.00224

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

44/100 0G 0.939 1.238 0.8986 19 640: 100%|██████████| 1/1 [00:04<00:00, 4.12s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.20s/it]

all 4 17 0.00314 0.0167 0.0173 0.00224

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

45/100 0G 1.072 0.8635 0.8733 28 640: 100%|██████████| 1/1 [00:03<00:00, 3.95s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.16s/it]

all 4 17 0.00314 0.0167 0.00671 0.00209

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

46/100 0G 0.9321 1.032 0.9111 31 640: 100%|██████████| 1/1 [00:03<00:00, 3.91s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0.00314 0.0167 0.00671 0.00209

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

47/100 0G 1.001 1.299 1.196 20 640: 100%|██████████| 1/1 [00:04<00:00, 4.29s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.00476 0.0333 0.00552 0.00116

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

48/100 0G 1.062 0.8952 0.9182 33 640: 100%|██████████| 1/1 [00:04<00:00, 4.09s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.16s/it]

all 4 17 0.00476 0.0333 0.00552 0.00116

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

49/100 0G 0.971 1.328 1.072 14 640: 100%|██████████| 1/1 [00:03<00:00, 3.94s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.25s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

50/100 0G 0.8615 1.082 0.9032 21 640: 100%|██████████| 1/1 [00:04<00:00, 4.07s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.16s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

51/100 0G 0.9852 0.8619 0.7137 32 640: 100%|██████████| 1/1 [00:04<00:00, 4.07s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.17s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

52/100 0G 0.8192 1.181 0.8867 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.27s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

53/100 0G 0.94 1.22 0.8548 18 640: 100%|██████████| 1/1 [00:04<00:00, 4.39s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

54/100 0G 1.116 0.7954 1.01 29 640: 100%|██████████| 1/1 [00:04<00:00, 4.27s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.26s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

55/100 0G 1.106 1.049 0.9257 25 640: 100%|██████████| 1/1 [00:04<00:00, 4.04s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

56/100 0G 1.166 1.335 1.236 12 640: 100%|██████████| 1/1 [00:04<00:00, 4.03s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.15s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

57/100 0G 0.9316 1.028 1.051 20 640: 100%|██████████| 1/1 [00:04<00:00, 4.05s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

58/100 0G 1.063 0.8656 1.032 43 640: 100%|██████████| 1/1 [00:04<00:00, 4.12s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

59/100 0G 1.066 0.8185 1.023 28 640: 100%|██████████| 1/1 [00:04<00:00, 4.20s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.17s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

60/100 0G 0.9158 1.158 1.165 15 640: 100%|██████████| 1/1 [00:04<00:00, 4.16s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

61/100 0G 0.8508 0.9712 0.6642 35 640: 100%|██████████| 1/1 [00:04<00:00, 4.12s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

62/100 0G 1.112 0.646 0.7845 49 640: 100%|██████████| 1/1 [00:04<00:00, 4.54s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

63/100 0G 1.07 0.9826 0.9378 26 640: 100%|██████████| 1/1 [00:04<00:00, 4.53s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.27s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

64/100 0G 0.9423 0.9209 0.8245 29 640: 100%|██████████| 1/1 [00:04<00:00, 4.10s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.24s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

65/100 0G 0.777 1.024 0.9407 18 640: 100%|██████████| 1/1 [00:04<00:00, 4.04s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.17s/it]

all 4 17 0.00187 0.0167 0.00261 0.000261

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

66/100 0G 1.116 1.017 1.049 17 640: 100%|██████████| 1/1 [00:04<00:00, 4.01s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.0026 0.0167 0.00155 0.000155

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

67/100 0G 1.104 0.7374 0.7988 57 640: 100%|██████████| 1/1 [00:04<00:00, 4.00s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.0026 0.0167 0.00155 0.000155

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

68/100 0G 1.091 1.148 1.344 29 640: 100%|██████████| 1/1 [00:04<00:00, 4.27s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.0026 0.0167 0.00155 0.000155

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

69/100 0G 1.04 0.9471 1.06 28 640: 100%|██████████| 1/1 [00:04<00:00, 4.54s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0.00253 0.0167 0.00154 0.000307

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

70/100 0G 0.9421 1.111 0.9506 18 640: 100%|██████████| 1/1 [00:04<00:00, 4.21s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0.00253 0.0167 0.00154 0.000307

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

71/100 0G 0.8869 1.072 0.7967 30 640: 100%|██████████| 1/1 [00:04<00:00, 4.19s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.00253 0.0167 0.00154 0.000307

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

72/100 0G 1.016 0.8447 0.9521 31 640: 100%|██████████| 1/1 [00:04<00:00, 4.68s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.24s/it]

all 4 17 0.00249 0.0167 0.00154 0.000154

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

73/100 0G 0.959 0.7464 0.7875 38 640: 100%|██████████| 1/1 [00:04<00:00, 4.26s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.17s/it]

all 4 17 0.00249 0.0167 0.00154 0.000154

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

74/100 0G 0.8573 1.223 1.094 11 640: 100%|██████████| 1/1 [00:04<00:00, 4.20s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.00249 0.0167 0.00154 0.000154

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

75/100 0G 0.8538 1.001 0.7996 20 640: 100%|██████████| 1/1 [00:04<00:00, 4.05s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.00249 0.0167 0.00154 0.000154

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

76/100 0G 1.025 0.9374 0.9919 25 640: 100%|██████████| 1/1 [00:04<00:00, 4.04s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.16s/it]

all 4 17 0.00249 0.0167 0.00154 0.000154

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

77/100 0G 0.8009 0.9451 0.7806 26 640: 100%|██████████| 1/1 [00:03<00:00, 3.99s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0.00249 0.0167 0.00154 0.000154

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

78/100 0G 1.073 0.9099 0.9775 42 640: 100%|██████████| 1/1 [00:04<00:00, 4.13s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.28s/it]

all 4 17 0.00249 0.0167 0.00154 0.000154

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

79/100 0G 0.6537 1.406 0.8425 14 640: 100%|██████████| 1/1 [00:04<00:00, 4.11s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.20s/it]

all 4 17 0.00249 0.0167 0.00154 0.000154

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

80/100 0G 0.8014 1.218 0.746 19 640: 100%|██████████| 1/1 [00:04<00:00, 4.11s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0.00249 0.0167 0.00154 0.000154

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

81/100 0G 1.006 0.8019 0.7255 35 640: 100%|██████████| 1/1 [00:04<00:00, 4.20s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.20s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

82/100 0G 0.9943 0.7726 0.7238 32 640: 100%|██████████| 1/1 [00:04<00:00, 4.77s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.25s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

83/100 0G 0.9247 1.012 0.7308 42 640: 100%|██████████| 1/1 [00:04<00:00, 4.90s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.24s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

84/100 0G 0.9576 0.8829 0.7759 24 640: 100%|██████████| 1/1 [00:04<00:00, 4.76s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.19s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

85/100 0G 0.8626 1.012 0.8213 18 640: 100%|██████████| 1/1 [00:04<00:00, 4.44s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

86/100 0G 0.7646 1.275 0.7179 16 640: 100%|██████████| 1/1 [00:04<00:00, 4.14s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.21s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

87/100 0G 0.9585 0.8869 0.815 23 640: 100%|██████████| 1/1 [00:04<00:00, 4.10s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.26s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

88/100 0G 0.9281 0.862 0.8755 17 640: 100%|██████████| 1/1 [00:04<00:00, 4.07s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

89/100 0G 0.9991 0.9142 1.257 16 640: 100%|██████████| 1/1 [00:04<00:00, 4.13s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.24s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

90/100 0G 0.9732 0.7642 0.8063 36 640: 100%|██████████| 1/1 [00:04<00:00, 4.36s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.29s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Closing dataloader mosaic

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

91/100 0G 0.6306 1.191 0.6511 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.23s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.31s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

92/100 0G 0.7359 0.9343 0.4631 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.48s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.38s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

93/100 0G 0.7088 1.273 0.8519 12 640: 100%|██████████| 1/1 [00:04<00:00, 4.27s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

94/100 0G 0.9116 1.07 0.561 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.23s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

95/100 0G 0.7572 1.154 0.795 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.08s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.26s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

96/100 0G 0.8463 0.8795 0.7818 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.26s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.31s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

97/100 0G 1.004 0.7446 1.227 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.14s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.20s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

98/100 0G 0.6773 1.103 0.4964 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.20s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.22s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

99/100 0G 0.7963 1.083 0.6276 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.13s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.24s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

Epoch GPU_mem giou_loss cls_loss l1_loss Instances Size

100/100 0G 0.6497 1.111 0.6663 13 640: 100%|██████████| 1/1 [00:04<00:00, 4.37s/it]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.27s/it]

all 4 17 0.00397 0.0167 0.00235 0.000235

100 epochs completed in 0.165 hours.

Optimizer stripped from /Users/n0man/Code/n03an.me/runs/detect/train/weights/last.pt, 66.5MB

Optimizer stripped from /Users/n0man/Code/n03an.me/runs/detect/train/weights/best.pt, 66.5MB

Validating /Users/n0man/Code/n03an.me/runs/detect/train/weights/best.pt...

Ultralytics 8.3.4 🚀 Python-3.12.4 torch-2.4.1 CPU (Apple M3 Max)

rt-detr-l summary: 502 layers, 32,148,140 parameters, 0 gradients, 103.8 GFLOPs

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 1/1 [00:01<00:00, 1.25s/it]

all 4 17 0.00538 0.0167 0.0183 0.0039

person 3 10 0.0323 0.1 0.11 0.0234

dog 1 1 0 0 0 0

horse 1 2 0 0 0 0

elephant 1 2 0 0 0 0

umbrella 1 1 0 0 0 0

potted plant 1 1 0 0 0 0

Speed: 0.3ms preprocess, 309.9ms inference, 0.0ms loss, 0.1ms postprocess per image

Results saved to /Users/n0man/Code/n03an.me/runs/detect/train

image 1/1 /Users/n0man/Code/n03an.me/notebooks/va/images/nemo.png: 640x640 4 persons, 1 bicycle, 269.2ms

Speed: 1.2ms preprocess, 269.2ms inference, 0.1ms postprocess per image at shape (1, 3, 640, 640)

results

[ultralytics.engine.results.Results object with attributes:

boxes: ultralytics.engine.results.Boxes object

keypoints: None

masks: None

names: {0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorcycle', 4: 'airplane', 5: 'bus', 6: 'train', 7: 'truck', 8: 'boat', 9: 'traffic light', 10: 'fire hydrant', 11: 'stop sign', 12: 'parking meter', 13: 'bench', 14: 'bird', 15: 'cat', 16: 'dog', 17: 'horse', 18: 'sheep', 19: 'cow', 20: 'elephant', 21: 'bear', 22: 'zebra', 23: 'giraffe', 24: 'backpack', 25: 'umbrella', 26: 'handbag', 27: 'tie', 28: 'suitcase', 29: 'frisbee', 30: 'skis', 31: 'snowboard', 32: 'sports ball', 33: 'kite', 34: 'baseball bat', 35: 'baseball glove', 36: 'skateboard', 37: 'surfboard', 38: 'tennis racket', 39: 'bottle', 40: 'wine glass', 41: 'cup', 42: 'fork', 43: 'knife', 44: 'spoon', 45: 'bowl', 46: 'banana', 47: 'apple', 48: 'sandwich', 49: 'orange', 50: 'broccoli', 51: 'carrot', 52: 'hot dog', 53: 'pizza', 54: 'donut', 55: 'cake', 56: 'chair', 57: 'couch', 58: 'potted plant', 59: 'bed', 60: 'dining table', 61: 'toilet', 62: 'tv', 63: 'laptop', 64: 'mouse', 65: 'remote', 66: 'keyboard', 67: 'cell phone', 68: 'microwave', 69: 'oven', 70: 'toaster', 71: 'sink', 72: 'refrigerator', 73: 'book', 74: 'clock', 75: 'vase', 76: 'scissors', 77: 'teddy bear', 78: 'hair drier', 79: 'toothbrush'}

obb: None

orig_img: array([[[250, 250, 250],

[250, 250, 250],

[250, 250, 250],

...,

[250, 250, 250],

[250, 250, 250],

[253, 253, 253]],

[[250, 250, 250],

[250, 250, 250],

[250, 250, 250],

...,

[250, 250, 250],

[250, 250, 250],

[253, 253, 253]],

[[250, 250, 250],

[250, 250, 250],

[250, 250, 250],

...,

[250, 250, 250],

[250, 250, 250],

[253, 253, 253]],

...,

[[250, 250, 250],

[250, 250, 250],

[250, 250, 250],

...,

[250, 250, 250],

[250, 250, 250],

[253, 253, 253]],

[[250, 250, 250],

[250, 250, 250],

[250, 250, 250],

...,

[250, 250, 250],

[250, 250, 250],

[253, 253, 253]],

[[253, 253, 253],

[253, 253, 253],

[253, 253, 253],

...,

[253, 253, 253],

[253, 253, 253],

[254, 254, 254]]], dtype=uint8)

orig_shape: (620, 512)

path: '/Users/n0man/Code/n03an.me/notebooks/va/images/nemo.png'

probs: None

save_dir: '/Users/n0man/Code/n03an.me/runs/detect/train2'

speed: {'preprocess': 1.2328624725341797, 'inference': 269.17195320129395, 'postprocess': 0.1399517059326172}]
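The `speed` entry in the output above reports per-stage latency in milliseconds. As a rough illustration (the helper name `summarize_speed` is hypothetical and not part of Ultralytics), the three stages can be summed to estimate end-to-end time per image and the corresponding throughput:

```python
def summarize_speed(speed):
    """Return (total_ms, fps) from a dict of per-stage latencies in milliseconds."""
    total_ms = sum(speed.values())
    fps = 1000.0 / total_ms if total_ms > 0 else float("inf")
    return total_ms, fps

# Values copied from the `speed` field printed above.
speed = {
    "preprocess": 1.2328624725341797,
    "inference": 269.17195320129395,
    "postprocess": 0.1399517059326172,
}
total_ms, fps = summarize_speed(speed)
print(f"{total_ms:.1f} ms per image, about {fps:.1f} images/s")
```

For this CPU run the total is roughly 270 ms per image, so under 4 images per second, with almost all of the time spent in inference.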

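def generate_caption(frame):
    # Relies on PILImage, blip_processor, blip_model, and device from earlier cells.
    # OpenCV frames are BGR; convert to RGB before handing the image to BLIP.
    pil_image = PILImage.fromarray(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
    inputs = blip_processor(images=pil_image, return_tensors="pt")
    # Move every input tensor to the selected device.
    inputs = {k: v.to(device) for k, v in inputs.items()}
    output = blip_model.generate(**inputs, max_new_tokens=50)
    caption = blip_processor.batch_decode(output, skip_special_tokens=True)[0]
    return caption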

def extract_color_and_attributes(caption, cls_name):
    colors = ["red", "black", "white", "yellow", "blue", "green", "orange", "pink", "gray", "brown"]
    caption_lower = caption.lower()
    color = "unknown"
    for c in colors:
        # Accept a color only if it appears within 20 characters of the class name in the caption
        if c in caption_lower and cls_name.lower() in caption_lower:
            idx_color = caption_lower.find(c)
            idx_cls = caption_lower.find(cls_name.lower())
            if abs(idx_color - idx_cls) < 20:
                color = c
                break
    # Example: detect attribute "white cap" for person
    attributes = []
    if cls_name == "person":
        if "white cap" in caption_lower or "white hat" in caption_lower:
            attributes.append("white cap")
    elif cls_name == "bicycle":
        if "folding" in caption_lower:
            attributes.append("folding")
        if "small wheels" in caption_lower:
            attributes.append("small wheels")
    return color, attributes

def detect_and_caption_objects(frame, yolo_model):
    results = yolo_model(frame)[0]  # assuming yolo_model returns detections in results.boxes
    img_h, img_w = frame.shape[:2]
    detected_objects = []
    for box in results.boxes:
        cls_id = int(box.cls[0])
        cls_name = results.names[cls_id]
        x1, y1, x2, y2 = map(int, box.xyxy[0])
        # Clamp the box to the image bounds before cropping
        crop = frame[max(0, y1):min(img_h, y2), max(0, x1):min(img_w, x2)]
        caption = generate_caption(crop)
        color, attributes = extract_color_and_attributes(caption, cls_name)
        # Build object dict
        obj = {
            "type": cls_name,
            "color": color,
            "attributes": attributes,
            "caption": caption
        }
        detected_objects.append(obj)
    return detected_objects
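The crop in `detect_and_caption_objects` clamps box coordinates to the image bounds, but a box that lies entirely outside the frame, or that collapses to zero width or height, would still produce an empty array, which the color conversion in `generate_caption` would likely reject. A minimal sketch of a guard, assuming a hypothetical helper named `clamp_box` that is not part of the original notebook:

```python
def clamp_box(x1, y1, x2, y2, img_w, img_h):
    """Clamp an xyxy box to the image; return None if nothing is left to crop."""
    x1, x2 = max(0, x1), min(img_w, x2)
    y1, y2 = max(0, y1), min(img_h, y2)
    if x2 <= x1 or y2 <= y1:
        return None  # empty or inverted box, nothing to caption
    return x1, y1, x2, y2

# Example with the image from above: orig_shape (620, 512) is (height, width).
print(clamp_box(-10, 20, 300, 700, img_w=512, img_h=620))  # (0, 20, 300, 620)
print(clamp_box(600, 20, 650, 100, img_w=512, img_h=620))  # None
```

Inside the detection loop, a `None` result would be the signal to `continue` before calling `generate_caption`.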

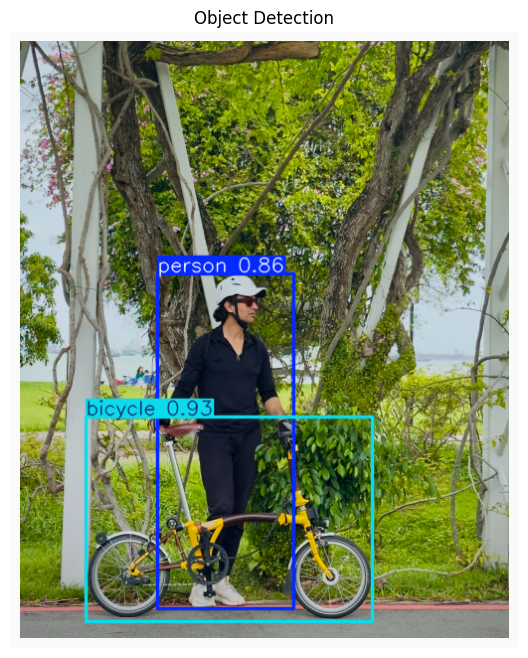

image_path = "images/nemo.png"
frame = cv2.imread(image_path)

detect_objects(image_path)

objects_with_attributes = detect_and_caption_objects(frame, yolo_model)

for obj in objects_with_attributes:
    print(f"Object: {obj['type']}, Color: {obj['color']}, Attributes: {obj['attributes']}")
    print(f"Caption: {obj['caption']}\n")

image 1/1 /Users/n0man/Code/n03an.me/notebooks/va/images/nemo.png: 640x544 1 person, 1 bicycle, 27.2ms

Speed: 1.7ms preprocess, 27.2ms inference, 0.3ms postprocess per image at shape (1, 3, 640, 544)

0: 640x544 1 person, 1 bicycle, 33.0ms

Speed: 1.1ms preprocess, 33.0ms inference, 0.5ms postprocess per image at shape (1, 3, 640, 544)

Object: bicycle, Color: unknown, Attributes: []

Caption: a man standing next to a yellow bike

Object: person, Color: unknown, Attributes: []

Caption: a man riding a bike on a sidewalk
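Both objects come back with `Color: unknown` even though the first caption mentions a yellow bike. `extract_color_and_attributes` only looks for the literal class name, and "bicycle" never appears in a caption that says "bike". One possible refinement, sketched here under assumptions (the `SYNONYMS` table and the `find_color` helper are hypothetical, and the three-word window is an arbitrary choice), matches whole words and accepts a few aliases per class:

```python
import re

COLORS = {"red", "black", "white", "yellow", "blue", "green", "orange", "pink", "gray", "brown"}

# Hypothetical alias table; extend it for the classes you care about.
# Aliases must be single words because the caption is split into word tokens.
SYNONYMS = {
    "bicycle": {"bicycle", "bike"},
    "person": {"person", "man", "woman", "boy", "girl"},
}

def find_color(caption, cls_name, window=3):
    """Return the first color word within `window` words of the class name or an alias."""
    words = re.findall(r"[a-z]+", caption.lower())
    targets = SYNONYMS.get(cls_name, {cls_name.lower()})
    target_positions = [i for i, w in enumerate(words) if w in targets]
    for i, w in enumerate(words):
        if w in COLORS and any(abs(i - j) <= window for j in target_positions):
            return w
    return "unknown"

print(find_color("a man standing next to a yellow bike", "bicycle"))
print(find_color("a man riding a bike on a sidewalk", "person"))
```

Matching on whole words also avoids false hits such as "red" inside "bored", which a plain substring search would accept.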